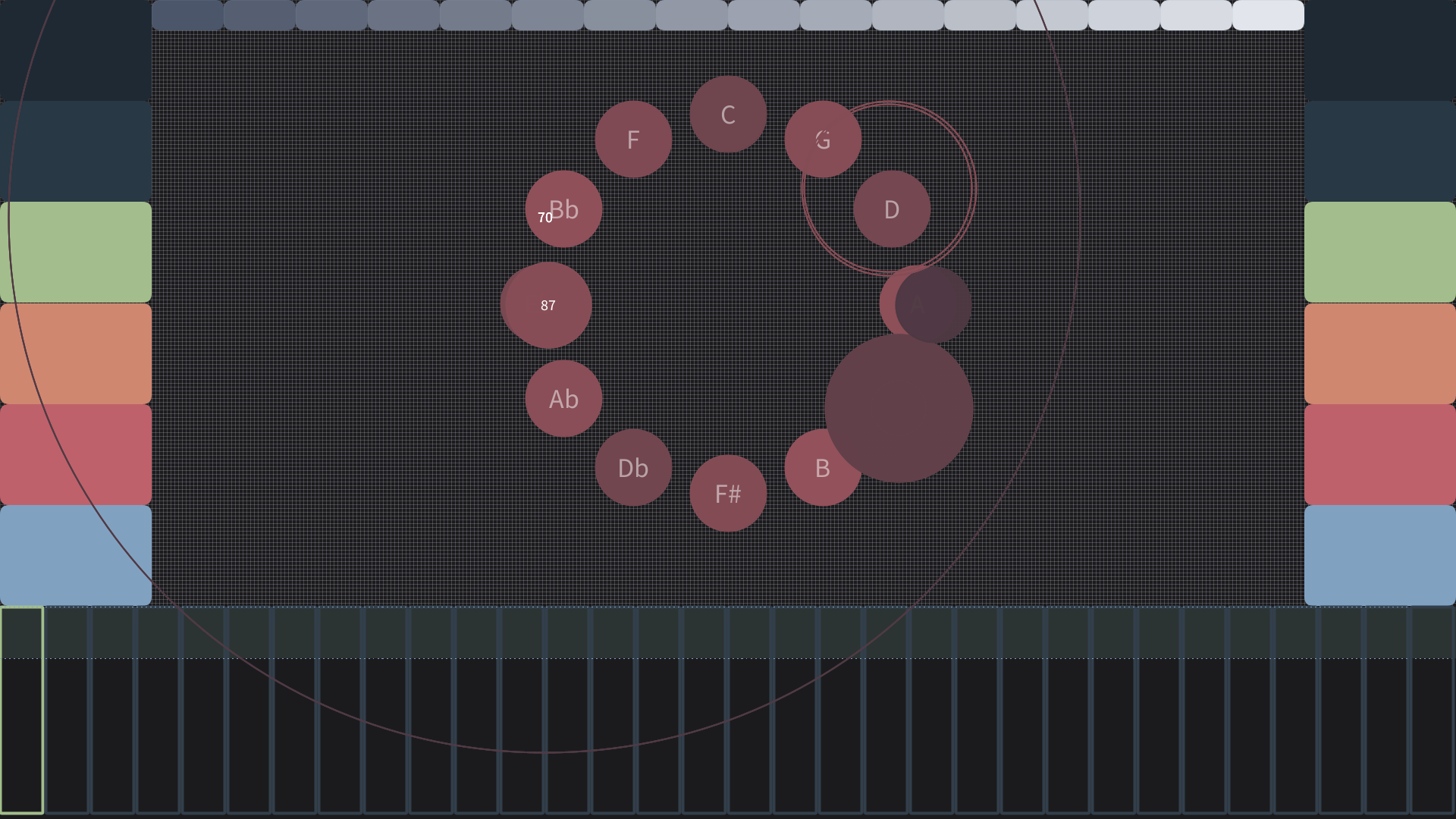

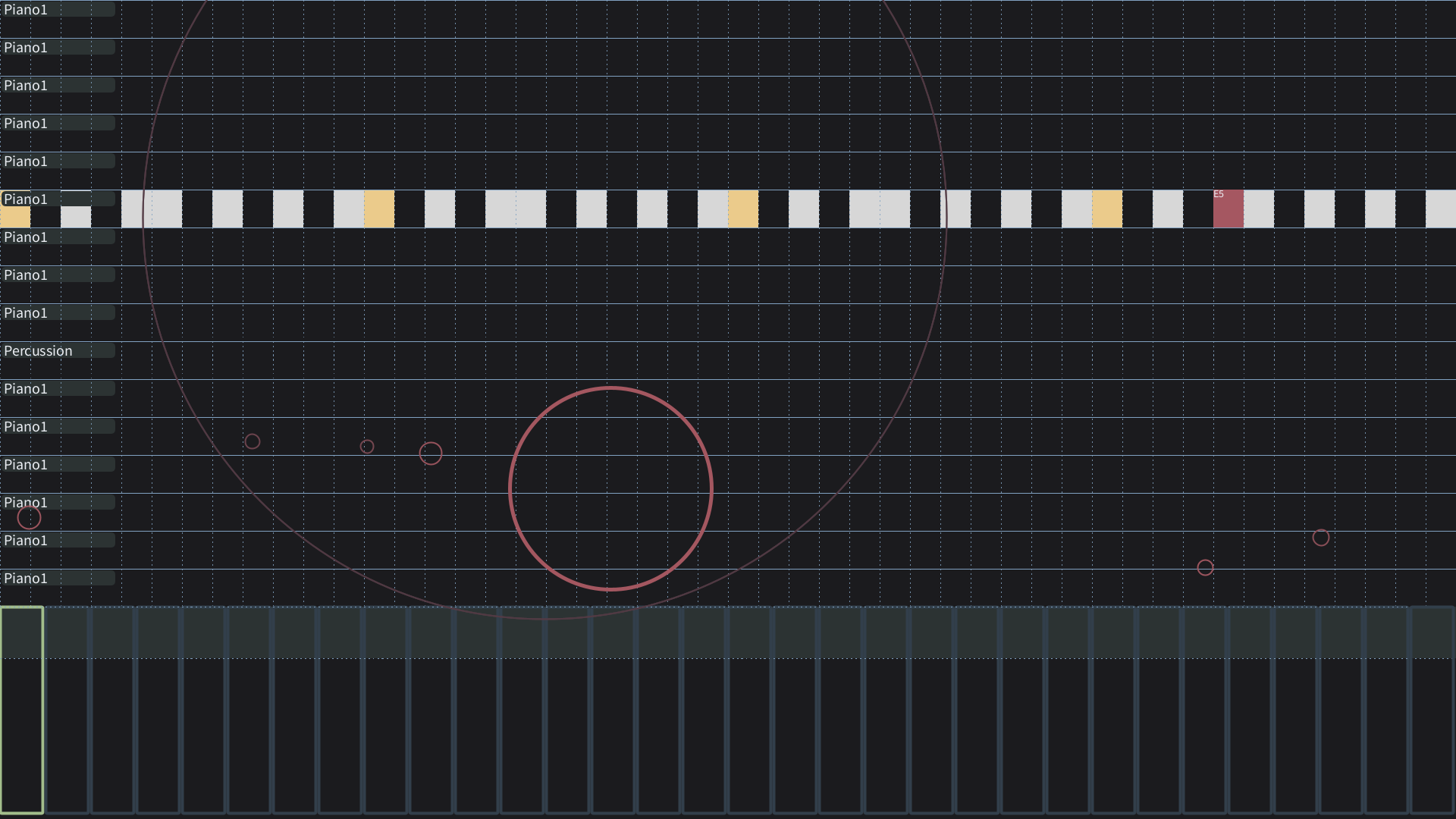

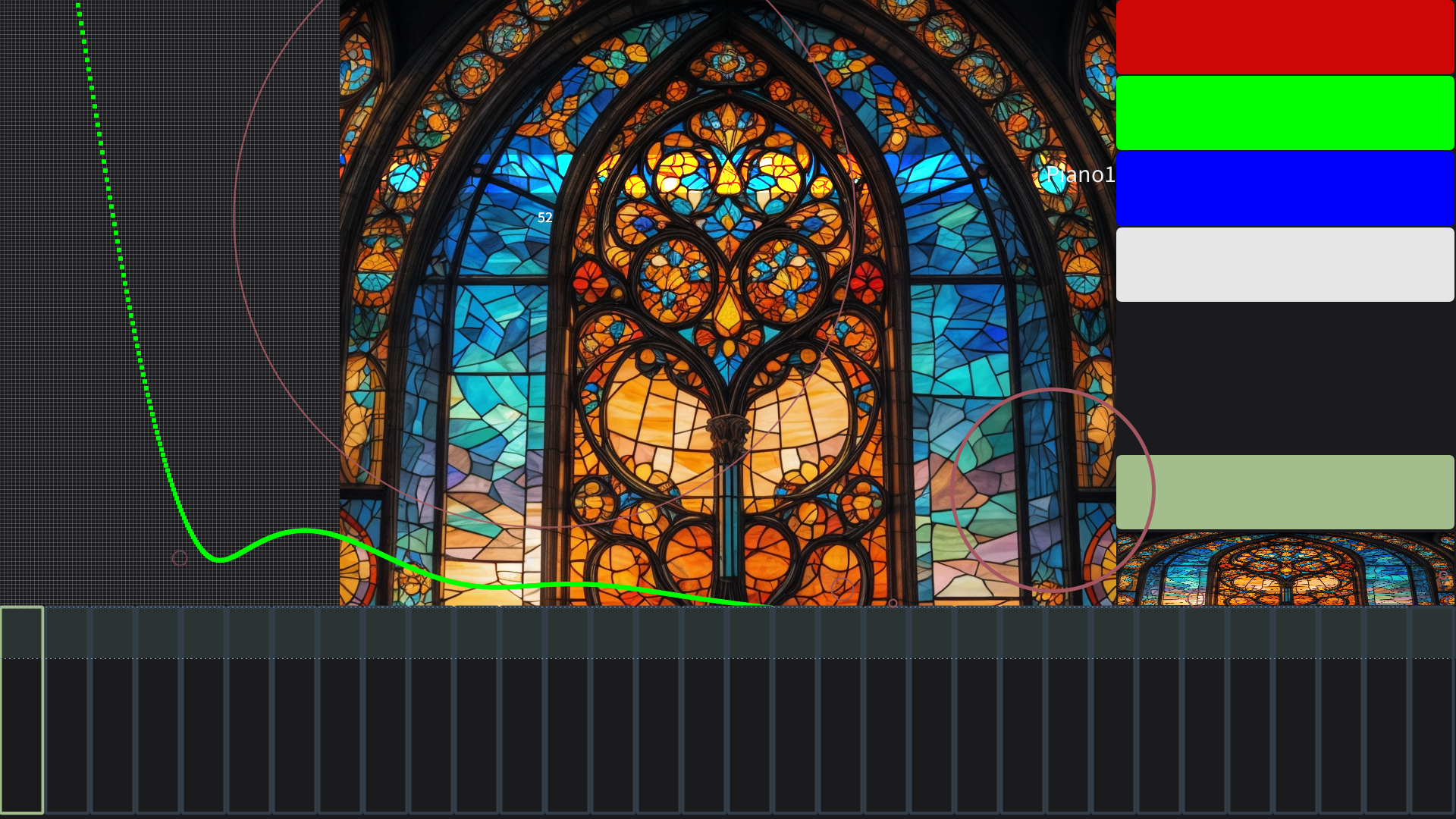

An interactive Processing sketch that merges generative sound and visual systems into a responsive, multisensory instrument. Designed to work with both mouse and touch input, the piece responds to tapping and dragging gestures, allowing participants to actively shape rhythm, melody, and visual form through direct interaction.

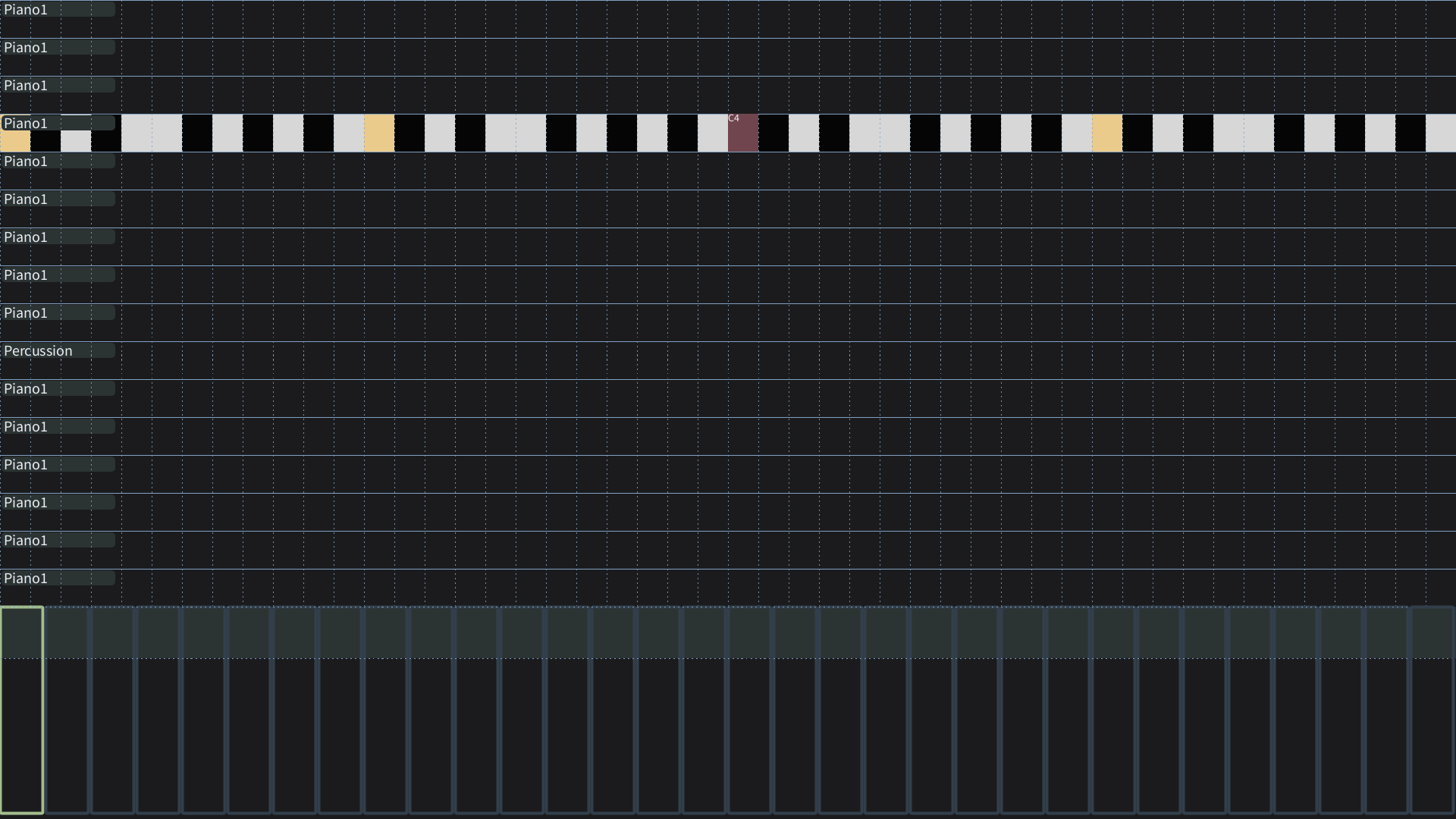

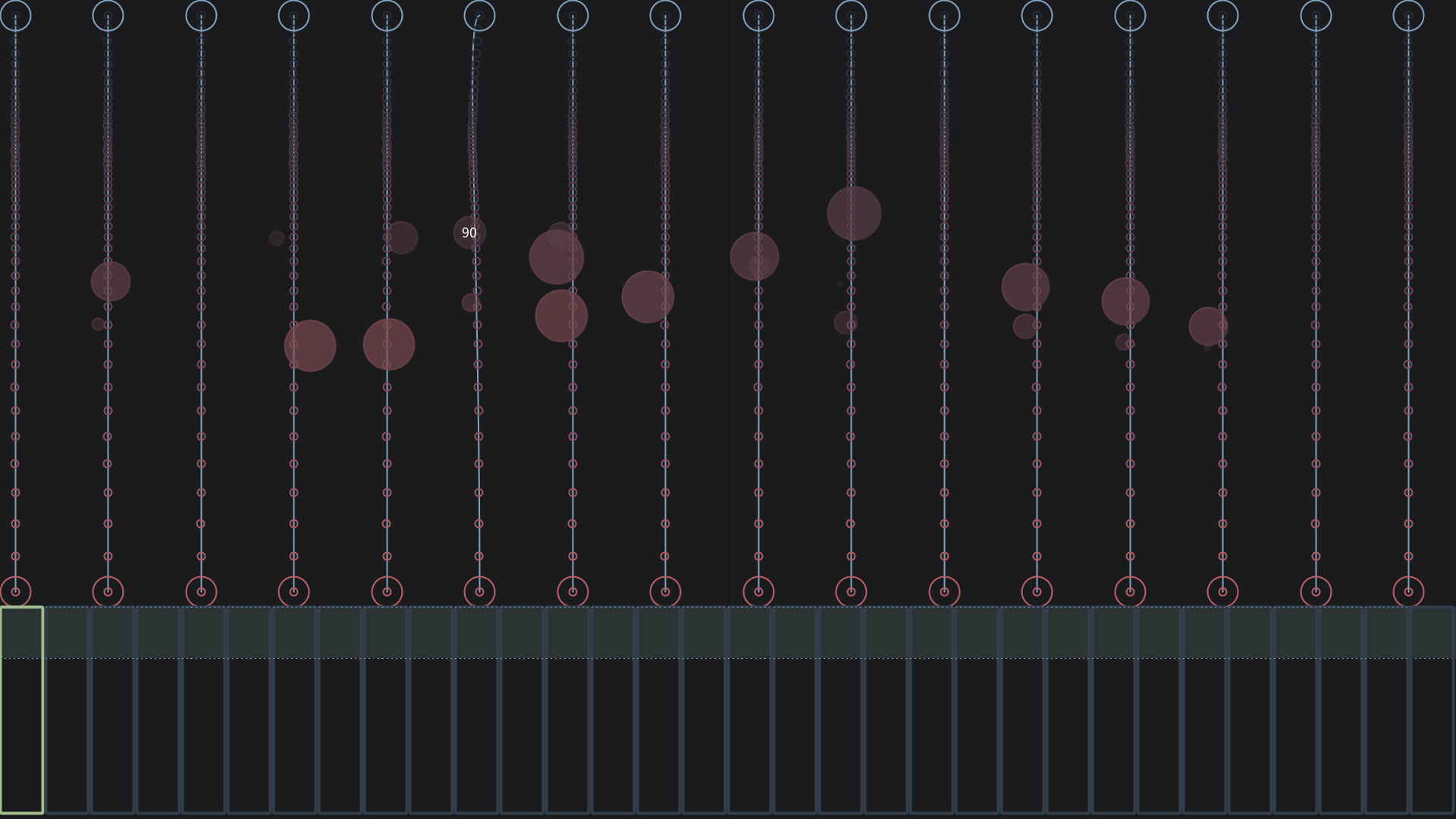

The system features a custom MIDI sequencing and playback engine built on Java's MIDI library, using its synthesizer and sequencer components. User interactions are recorded into looping sequences and quantized to a rhythmic grid, which keeps the rhythm coherent while still allowing expressive, improvisational input. As sequences evolve, audio events stay tightly synchronized with the generative visuals, creating a cohesive audio-visual performance space.
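To make the record-and-quantize loop concrete, here is a minimal sketch using the standard `javax.sound.midi` classes (`Sequence`, `Track`, `ShortMessage`, `Sequencer`). It is an illustration under stated assumptions, not the project's actual engine: the class name `QuantizedLoop`, its helper methods, the 480-tick resolution, the sixteenth-note grid, and the one-bar loop length are all hypothetical choices. A tap's raw tick position is snapped to the nearest grid step and wrapped into the loop, then stored as a note-on/note-off pair that the sequencer replays continuously.

```java
import javax.sound.midi.InvalidMidiDataException;
import javax.sound.midi.MetaMessage;
import javax.sound.midi.MidiEvent;
import javax.sound.midi.MidiSystem;
import javax.sound.midi.MidiUnavailableException;
import javax.sound.midi.Sequence;
import javax.sound.midi.Sequencer;
import javax.sound.midi.ShortMessage;
import javax.sound.midi.Track;

// Hypothetical helper class; resolution, grid and loop length are assumptions.
public class QuantizedLoop {
    static final int RESOLUTION = 480;            // ticks per quarter note (assumed)
    static final int STEP = RESOLUTION / 4;       // sixteenth-note grid (assumed)
    static final int LOOP_TICKS = RESOLUTION * 4; // one bar of 4/4 (assumed)

    // Convert milliseconds since the loop started into ticks at the given tempo.
    static long msToTicks(double ms, double bpm) {
        return Math.round(ms * RESOLUTION * bpm / 60000.0);
    }

    // Snap a raw tick to the nearest grid step, then wrap it into the loop.
    static long quantize(long rawTick) {
        long snapped = Math.round((double) rawTick / STEP) * STEP;
        return snapped % LOOP_TICKS;
    }

    // Create a PPQ-based sequence at the assumed resolution.
    static Sequence newLoopSequence() {
        try {
            return new Sequence(Sequence.PPQ, RESOLUTION);
        } catch (InvalidMidiDataException e) {
            throw new IllegalStateException(e);
        }
    }

    // Create a track whose End of Track marker (meta type 0x2F) sits at
    // LOOP_TICKS, so the sequence is one full loop long before any notes exist.
    static Track createLoopTrack(Sequence sequence) {
        Track track = sequence.createTrack();
        try {
            track.add(new MidiEvent(new MetaMessage(0x2F, new byte[0], 0), LOOP_TICKS));
        } catch (InvalidMidiDataException e) {
            throw new IllegalStateException(e);
        }
        return track;
    }

    // Record one gesture as a quantized note-on/note-off pair inside the loop.
    static void recordNote(Track track, long rawTick, int channel, int note, int velocity, long length) {
        long start = quantize(rawTick);
        long end = Math.min(start + length, LOOP_TICKS);
        try {
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_ON, channel, note, velocity), start));
            track.add(new MidiEvent(new ShortMessage(ShortMessage.NOTE_OFF, channel, note, 0), end));
        } catch (InvalidMidiDataException e) {
            throw new IllegalArgumentException(e);
        }
    }

    // Play the sequence on the default sequencer, looping the bar indefinitely.
    static Sequencer startLoop(Sequence sequence, float bpm)
            throws MidiUnavailableException, InvalidMidiDataException {
        Sequencer sequencer = MidiSystem.getSequencer(); // connected to the default synthesizer
        sequencer.open();
        sequencer.setSequence(sequence);
        sequencer.setTempoInBPM(bpm);
        sequencer.setLoopStartPoint(0);
        sequencer.setLoopEndPoint(LOOP_TICKS);
        sequencer.setLoopCount(Sequencer.LOOP_CONTINUOUSLY);
        sequencer.start();
        return sequencer;
    }
}
```

In a Processing sketch, a handler such as `mousePressed()` could convert the elapsed time since the loop began with `msToTicks` and pass the result to `recordNote`; because quantization happens at record time, a slightly early or late tap still lands on the grid, while the choice of pitch and velocity stays expressive.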

To support rapid experimentation, I designed an object-oriented framework that abstracts away low-level MIDI concerns such as timing, playback, and device management. This structure made it easy to prototype and explore new “synestrument” ideas without rewriting core sequencing logic, enabling fast iteration on interaction models, sound behaviors, and visual responses.
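One way such a framework could be layered is sketched below. Every name here (`Synestrument`, `NoteSink`, `PentatonicPad`) is hypothetical and the structure is an assumption, not the project's actual class hierarchy. The base class owns timing and quantization, playback sits behind a small interface (which in the real sketch might wrap a MIDI track or synthesizer), and a concrete instrument only decides how a gesture maps to pitch and velocity.

```java
import java.util.Objects;

public class SynestrumentSketch {

    // Hypothetical playback abstraction; a real implementation might wrap a
    // MIDI Track or Synthesizer, but instruments never see that detail.
    interface NoteSink {
        void play(long tick, int note, int velocity);
    }

    // Hypothetical base class: owns quantization and loop wrapping so that
    // each new instrument only decides what a gesture should sound like.
    abstract static class Synestrument {
        private final int stepTicks;
        private final int loopTicks;
        private final NoteSink sink;

        Synestrument(int stepTicks, int loopTicks, NoteSink sink) {
            this.stepTicks = stepTicks;
            this.loopTicks = loopTicks;
            this.sink = Objects.requireNonNull(sink);
        }

        // Subclasses map a gesture position to sound parameters.
        protected abstract int pitchFor(float x, float y);

        protected abstract int velocityFor(float x, float y);

        // Called by the host sketch on a tap; timing logic lives here only.
        public final void tap(float x, float y, long rawTick) {
            long snapped = Math.round((double) rawTick / stepTicks) * stepTicks;
            sink.play(snapped % loopTicks, pitchFor(x, y), velocityFor(x, y));
        }
    }

    // Example instrument: x picks a note from two octaves of a C major
    // pentatonic scale starting at middle C; y sets loudness (top is loudest).
    static class PentatonicPad extends Synestrument {
        private static final int[] SCALE = {0, 2, 4, 7, 9};
        private final float width;
        private final float height;

        PentatonicPad(int stepTicks, int loopTicks, NoteSink sink, float width, float height) {
            super(stepTicks, loopTicks, sink);
            this.width = width;
            this.height = height;
        }

        @Override
        protected int pitchFor(float x, float y) {
            int steps = SCALE.length * 2;
            int idx = Math.max(0, Math.min(steps - 1, (int) (x / width * steps)));
            return 60 + 12 * (idx / SCALE.length) + SCALE[idx % SCALE.length];
        }

        @Override
        protected int velocityFor(float x, float y) {
            return Math.max(1, Math.min(127, Math.round(127 * (1 - y / height))));
        }
    }
}
```

With this split, prototyping a new instrument means writing one small subclass; the sketch's input handler would simply call something like `pad.tap(mouseX, mouseY, currentTick)`, and the shared base class keeps every instrument on the same grid.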

The result is an algorithmically driven composition environment that explores the relationship between sound, code, gesture, and visual form, emphasizing play, discovery, and real-time feedback.